Google Summer of Code 2009 Guidelines (2009/04/23)

If you are not a participant in the 2009 Google Summer of Code program, you can safely skip to the next section; there’s not much interesting stuff for you here.

Otherwise, welcome, and congratulations on making it into the Plan 9 program for the 2009 GSoC. We received over 50 applications and were only able to accept 7 of them. If you have not done so yet, take a moment to send your mentor(s) an email, introducing yourself. These are the people who will be guiding you through the next few months, so it’s good to have an open communications channel.

Communication

We are expecting students to embrace all means of communication during this period, and as such, we’ve created several means by which you can get in touch with us:

Via your blog. You will be provided with access to blog about your SoC program progress via cat-v.org. Status reports should be posted here first.

Via the Plan 9 GSoC mailing list. Communicate with students, mentors, and others interested in the Plan 9 GSoC program at: http://groups.google.com/group/plan9-gsoc. Your status reports should be submitted publicly here as well. This works well because the reports should already be well-formatted markdown text.

Via IRC. Many mentors and students are available for real-time discussion at #plan9-gsoc on irc.freenode.net. We expect you to be online on IRC while you are working on your project. This will allow us to help you very quickly with simple questions, and reduce overall load for all mentors. (In addition to this, the ability to have real-time discussions about your project is fun, and brings together a sense of community).

Private communication via email is discouraged. Unless something embarrassing or private is holding you back, we’d prefer that you default to the public communication media above.

Progress Reports

Progress reports must be entered into your blog weekly. Your blog will be formatted in markdown, so these blog posts should be well-formatted text, and perfect for emailing to the plan9-gsoc list as well. After these reports, you and your mentor must convene to discuss them and any outstanding issues. These reports should detail where you are with your project, where you expect to be by the next week, and any blocking issues you are facing (especially if they may place a delay on your project).

This weekly status update is the minimal requirement – you may of course contact your mentor or blog or email more than once per week, should you feel the need. The mentors and the community at large are there to help you through your project for the summer, so don’t be ashamed to ask questions!

The day to update your blog is Monday, between 12:00 and 18:00 UTC.

Community Bonding Period (20 April - 23 May)

During the community bonding period, we will be expecting you either to be completing tasks assigned by your mentor to familiarize yourself with the environment in which you will be working, or doing preliminary work on your project (if you are already very familiar with Plan 9). This preliminary work would include design and architectural decisions. During this period, you should be discussing any questions you have about Plan 9 or your project with your mentor. Additionally, you should be actively discussing the architecture of your project with your mentor.

During this time, you will also be set up with access to a version control repository and blog. Unless your project requires otherwise, we encourage you to perform your developmental tasks on an actual Plan 9 system or virtual machine. Examples of exempt requirements are development in Inferno, or working on components for other operating systems (e.g. Glendix, vx32/9vx for Windows). IMPORTANT: All source code must be kept in this public repository, and all development for your project should be committed to the repository as it is available. No excuses.

Interim Period (24 May - 6 July)

Weekly status updates should be sent to your mentor(s). As specified above, these reports should contain your progress for the week, and where you expect to be by the next week. If you have any questions, pose them here. If you are falling behind schedule, let your mentor know what issues you are experiencing, and try to work out a way to catch up.

Midterm Period (6 July to 13 July)

Mentors and students should work together to determine specifics on their mid-term evaluations. We expect development to continue through this phase, and a weekly report will be expected here, too.

Interim Period (13 July - 10 August)

This period of development follows the 24 May - 6 July Interim Period schedule as far as expected work and communication.

Pencils Down Period (10 August - 17 August)

Remove bugs, clean code, discuss the project with your mentor. You should not plan to be using this time for your project, though it is not expressly forbidden. At the end of this period, you’ll be asked to take a survey about the experience, to give the mentors feedback on how you felt about the project and any problems you had, and to offer suggestions for smoother sailing in the years to come.

Questions

If you have questions about your project, don’t hesitate to get in touch with your mentor(s). If you are unable to get in touch with your mentor(s) over an extended period of time (four or five days; no longer than a week), or are unable to reach them in an urgent situation, get in touch with Anthony Sorace, the project administrator (anothy_x on IRC, anothy@gmail.com via email) or any other mentor who is immediately available.

Working With Plan 9

Plan 9 is not like any other modern operating system you have used. Learning how to use a different operating system is not too different from learning a new skill or language, and the same amount of perseverance and patience is required. The best thing to do before diving into Plan 9 is to accept that you will be learning new things (and not just re-learning things you already knew).

Installing Plan 9

Our hardware support is somewhat lacking. We run on many modern machines, but we do not have drivers for some of the “newest and coolest” devices. The best way to run Plan 9 when learning is by installing it in a virtual machine. The installation process itself is not complicated, but does take quite some time. For this reason, we are providing a new, preinstalled qemu disk image. While Plan 9 does come pre-installed on this machine, there are still plenty of useful administration tasks to learn, all of which will help familiarize you with the system.

The disk image is available at http://206.71.190.158/~dho/plan9-qemu.qcow.bz2. After decompressing it (for example with bunzip2), boot Plan 9 in qemu by running: qemu -hda plan9-qemu.qcow -boot c -std-vga.

To become familiar with the Plan 9 operating system, you should set up the qemu image and start exploring the system. A good first project to undertake is to configure your qemu VM to be a standalone CPU server. Instructions for configuring Plan 9 as a CPU server are available at http://www.plan9.bell-labs.com/wiki/plan9/configuring_a_standalone_cpu_server/index.html.

Plan 9 Resources

- The Wiki: http://www.plan9.bell-labs.com/wiki/plan9/

- Sources: http://plan9.bell-labs.com/sources/

- 9fans: http://mail.9fans.net/listinfo/9fans

- Plan 9 papers and documents: http://doc.cat-v.org/plan_9/

- Inferno papers and documents: http://doc.cat-v.org/inferno/

- Man pages: http://man.cat-v.org/

Working With Inferno

Inferno is an operating system designed to function well for implementing distributed systems. Mechiel Lukkien has created a wonderful introductory guide for Inferno at http://www.xs4all.nl/~mechiel/inferno/getting-started.html.

2009 GSoC Update: Accepted Projects (2009/04/20)

Google has officially released the list of accepted candidates per organization. We were lucky enough to receive all 7 slots we asked for. It was a difficult choice; we had many good proposals, several for the same project, and it wasn’t terribly easy for us to pick from them. (Maybe we will get more in the future!)

The list of accepted projects for 2009’s Google Summer of Code program (in no particular order):

- Iruatã Martins dos Santos Souza: Sending 9load to /dev/null. Your mentor is Ron Minnich.

- Alex Shinn: Scheme for Plan 9. Your mentor is Charles Forsyth.

- Priyanka Sharma: Glendix: Implement Per Process Namespaces. Your mentor is Anant Narayanan.

- Gabriel Díaz Lopez de la Llave: Mailer (upas) Replacement or Upgrades. Your mentor is Erik Quanstrom.

- André Günther: Port vx32 and 9vx to Windows. Your mentor is Russ Cox.

- Manuel Franceschini: Firewalling / Packet Filtering in Plan 9. Your mentor is Devon H. O'Dell.

- Manzurjon Mukhitdinov: GitFS. Your mentor is Roman Shaposhnik.

Congratulations again to everyone who was accepted. You should contact your mentor as soon as possible to get acquainted. I will be sending a mailing out in the next couple of days detailing some of our expectations for this year’s program, as well as containing some first step-style exercises to get you acquainted with Plan 9.

To those of you who were not accepted into this year’s program: we’re sorry that you didn’t make it, and believe me, some of the picking and choosing was very, very difficult. We’d still love to have you as active members of our project. We’re still around on 9fans, here at cat-v.org, and on #plan9 on Freenode.

Thanks again to all applicants and mentors!

–dho

2009 GSoC Update (2009/03/25)

Plan 9 in the 2009 GSoC

Firstly, I’d like to thank Anthony Sorace for investing the time and energy required to get Plan 9 into the Summer of Code program for 2009. His application was a success and we’ll be working hard this year to make the 2009 Summer of Code program a huge success for Plan 9.

If (by some stretch) you are a capable Plan 9 developer who is not yet aware of that, we can always use more mentors. Get in touch with Anthony via the plan9-gsoc mailing list.

If you’re a student, we’ve got a plethora of great projects for you – check out http://gsoc.cat-v.org/ideas/ for these. As usual, we’re always willing to hear your ideas, make suggestions, and help you with your proposal. Outside of the plan9-gsoc mailing list, many of us are also on IRC: #plan9-gsoc and #plan9 on irc.freenode.net.

I wish to extend a warm welcome to all interested participants, a huge thanks to everyone who is helping out with the program as an administrator or mentor, and hope that we can get a bunch of cool stuff done this summer.

–dho

Good News (2007/11/10)

Oi

I haven’t had much time for hacking on o9fs; the university takes most of my time. But that’s not what this post is about, as one can presume… The good news is that there are two people testing o9fs and reporting bugs. That is something every project needs, since the author often cannot find every possible problem because of, among other things, his or her own limited test environment. So, thanks to Matthias Bauer and Christian Kellermann, the project is again quite active.

iru

Final Update (2007/09/25)

I don’t know if anyone is still reading this blog, but I wanted to write about the outcome of my project, how GSoC went for me and my future plans related to the project.

In case anyone reading this didn’t know, I was working on Inferno authentication, in particular the SPKI infrastructure for Inferno. I completed the project successfully, and produced the following:

- An implementation of Inferno authentication for Plan 9 and p9p

- A SPKI verifier which can produce Inferno certificates

- A SPKI version of keyfs which stores keys and certificates securely, and allows these to be queried

- A command which creates SPKI certificates to form part of a chain of delegation of authority

- I also adapted a program written by my mentor Charles to create a module which performs SPKI reduction.

I’d like to say how GSoC was from the perspective of someone who was never involved in open source development before. In general, I felt that GSoC went well for me. I started off slowly because I found it very difficult to become familiar with Plan 9 and Inferno so quickly since I’d never heard of them until earlier this year. I think this was the main thing I’d change - if I did this again I’d try my best to prepare more. I also should have asked my mentor more questions - at first I was afraid to ask many things in case the questions were stupid. On the other hand, after a few weeks I started working a lot more quickly and I really learned a huge amount during the project, not just about Plan 9/Inferno but also about development and managing a project in general. Overall, I think I produced more useful code than I had expected at the start.

In future, I intend to continue to work on improving or fixing the code I have written this summer, if necessary. However, I also want to become actively involved in the development of Plan 9 and Inferno, and contribute as much as possible to these projects. This includes both work related to my project and also totally different work, since I have a lot of ideas. I guess I’ll post to the relevant mailing lists when I want to work on something.

Manual pages (2007/08/31)

manual pages

yesterday i wrote the missing manual pages for the rabin and vac library, and also added some documentation to the venti library. it can also be found in the mercurial repository of course.

Say Hello to Angled 0.1 (2007/08/22)

I feel I’m in the seventh heaven. After a few sleepless nights struggling with Mozilla’s XPCOM, I finally got the 9P Firefox plugin to work.

The plugin is called Angled (an anagram of Glenda, the Plan 9 bunny) and is in a pretty simplistic state right now: you can read any files served by 9P right in your browser window. Let’s take a step by step look.

First I start up Inferno to run a 9P server. ${INFERNO}/usr/anant/home is symlinked to my actual home directory, /Users/anant:

[theghost anant]$ emu

; runas nobody {listen -A tcp!localhost!1564 {export /usr/anant/home &}}

Let’s see what files are actually there:

[theghost web9]$ pwd

/Users/anant/Plan9/web9

[theghost web9]$ ls

README TODO js9p php9p

Alright, I open my browser window and type ‘ninep://localhost!1564/Plan9/web9/README’ into the address bar. I could also say ‘tcp!localhost!1564’, but TCP is the only protocol available for Angled, so it would be redundant. Now, for the goodies: Screenshots!
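For readers curious how an address of this shape can be taken apart, here is a minimal sketch in JavaScript. It is not taken from the Angled sources: the function name parseNinepUrl is hypothetical, and falling back to port 564 (the registered 9P port) when no port is given is an assumption, since the post does not say what Angled does in that case.

```javascript
// Hypothetical helper: split a ninep:// address into host, port and path.
// Assumption: the part before the first "/" is a dial-string-like
// [tcp!]host[!port], and a missing port falls back to 564.
function parseNinepUrl(url) {
  const match = /^ninep:\/\/([^\/]+)(\/.*)?$/.exec(url);
  if (match === null) {
    throw new Error("not a ninep:// URL: " + url);
  }
  const parts = match[1].split("!");
  // TCP is the only transport, so an explicit "tcp!" prefix is optional.
  if (parts.length > 1 && parts[0] === "tcp") {
    parts.shift();
  }
  const host = parts[0];
  const port = parts.length > 1 ? parseInt(parts[1], 10) : 564;
  if (host === "" || Number.isNaN(port)) {
    throw new Error("malformed address: " + match[1]);
  }
  return { host: host, port: port, path: match[2] || "/" };
}
```

With this sketch, the address typed above yields the host localhost, the port 1564 and the path /Plan9/web9/README, and the longer ‘tcp!localhost!1564’ form gives the same result.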

Cool! But wait, Angled also displays binary files right in the browser. There’s a catch though, it will only work for binary files that can be viewed directly in the browser window. Certain types of files (.doc for example) do trigger a download request, but then become corrupted for some reason.

[theghost content]$ pwd

/Users/anant/Plan9/web9/js9p/angled/content

[theghost content]$ ls

angled.png firefoxOverlay.xul glenda-error.png overlay.js

Let’s say I want to view angled.png. Here’s what I get:

Okay, but what if you type in a URL that points to an invalid file? Check this out:

Sweet! I’m yet to figure out how to transmit the exact error message to that page, so you’ll have to make do with that generic image for now.

Okay, now onto the bad parts. Angled doesn’t support authentication yet (although the base JS implementation is capable of generating and parsing auth messages). Next, you won’t get directory listings (you’ll get a bunch of binary gibberish which is actually Rstat messages for the directory’s contents). Also, I’m doing the 9P connection and transactions in a blocking thread, so the UI freezes while all that is done. I couldn’t feel the difference since I was testing on my local 9P server, but connecting to remote 9P servers won’t be a pleasant experience. The solution to this is to create a custom nsIChannel implementation, which is a lot of work… I’ll do it when I get to it.

Enjoy!

Ventisrv fileformat (2007/08/20)

Ventisrv file format

A few minutes ago I added a document to the ventivac hg repository (doc/ventisrv-fileformat.ms, a troff -ms file) about the file format used by ventisrv. The format is not very complex, the description should be enough to recover data in case of problems (such as disk failures, or bugs–though note that ventisrv will only ever append data to the index/data file, never overwrite it). I also made the file available as ventisrv-fileformat.ps (original postscript) and ventisrv-fileformat.pdf (converted to pdf).

Now getting back to finishing the last bits!

The end (2007/08/20)

The QEMU-on-Plan 9 strategy paper has morphed, growing a lot more discussion of QEMU internals. The latest version is available here.

Changes to libdynld are complete. The tarball is here. Some additional commentary is available here.

FP Final Update (2007/08/20)

I have made a snapshot of my code as a tar.gz file. I don't know how to upload it, so I have copied it to my home directory on gsoc.cat-v.org.

In the end I did not manage to complete everything I had planned. The plugin currently loads and runs libinit and emuinit, but fails while running disinit. I have moved the thread management to pthreads, and the problem now lies in the exception and error handling: I have problems when calling waserror(). I've seen that the IE plugin changes the exception handler; I think I may need to do the same, but I'm not sure about it.

The error I had with the free calls is solved. I was using __clone to create threads; now I use pthread_create, and that seems to solve the problem. I now get core dumps for the errors and can use them to track the problems down.

The status of the other project goals is:

- Dis files to execute: It’s easy to transfer files from Mozilla to the plugin, so I can use that to load a dis file into the plugin and run it. I don’t know yet whether I can pass the dis file as the imod parameter of emuinit in order to execute it. I have tested this with other files, such as JPEGs, to load an image into the plugin window.

- Keyboard: I know how to handle keyboard input; I only have to modify the readkbd function to let firefox-os.c manage the keyboard input.

- Graphics: The plugin uses X11 to manage graphics, so I can use the same graphics management as the Linux emu.

I will continue developing the project to completion regardless of the final GSoC evaluation.

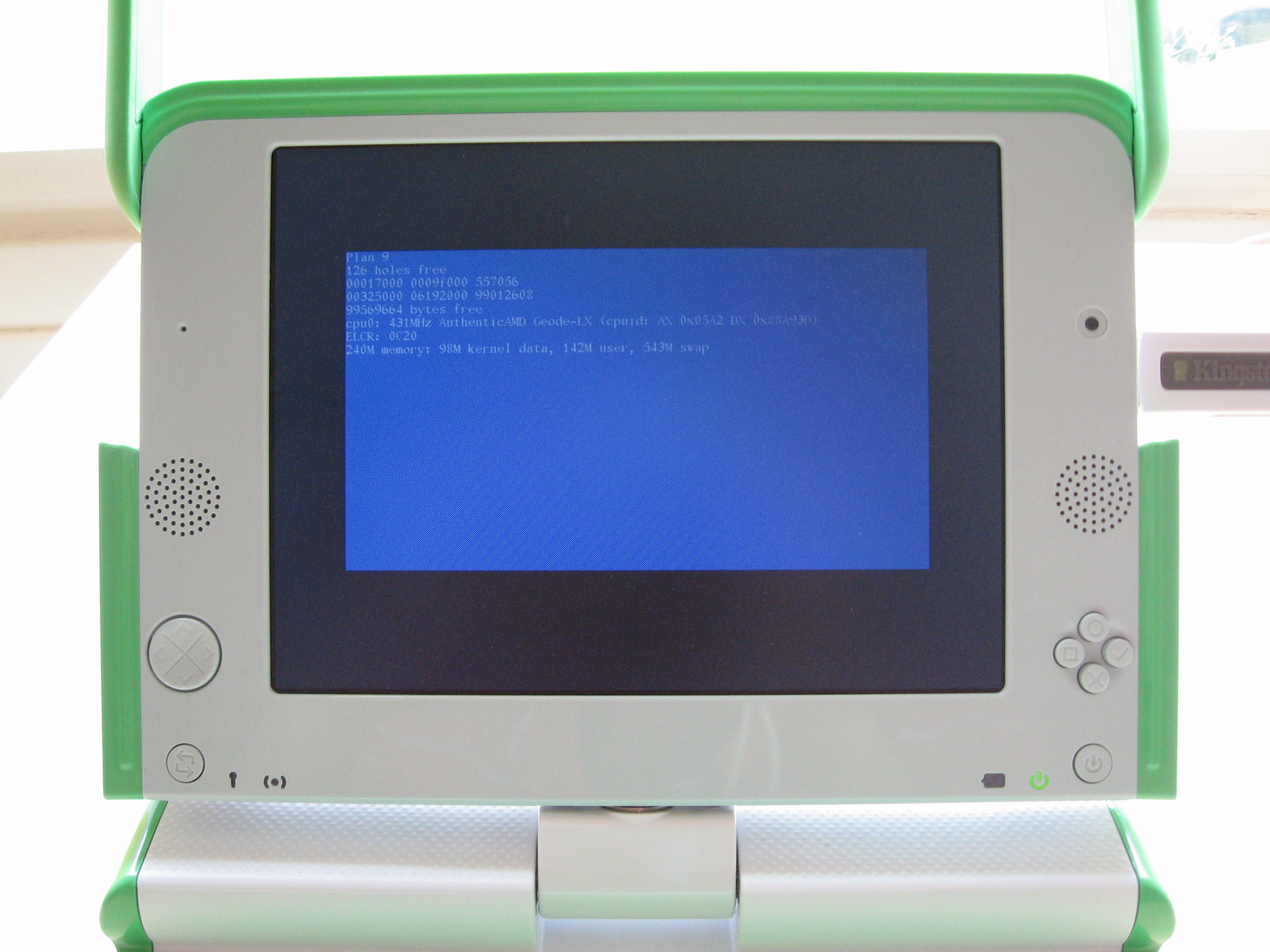

Plan9 Kernel Booting on OLPC (2007/08/19)

Finally we have the Plan9 kernel up and running on the OLPC! Here is the screenshot:

Implementing a Protocol in Mozilla (2007/08/18)

Creating a Firefox extension is nothing short of an adventure. I was able to get started pretty quickly thanks to this web-based quick-start wizard: all the boilerplate code was generated in literally no time.

Now, onto the actual functionality of the extension. I have to implement a protocol handler for the 9P protocol, which essentially means you type in “ninep://sources.cs.bell-labs.com/” and start reading files right off the browser window. (ninep:// because a URL can’t start with a number)

This page provides some useful insights and code snippets on the subject of adding a new protocol handler. I was able to get as far as displaying a Glenda image whenever you type in a URL beginning with ‘ninep’.

The way this works is you create an XPCOM component that implements a standard interface. Specifically, the newChannel() method is where all the action is. It receives a URL and you do something and return an nsIChannel. Mozilla provides standard nsIChannel implementations for popular protocols such as http, ftp and even the ubiquitous file://.

The intuitive thing to do here would be to do all my 9P processing in the newChannel() implementation and return a stream in a standard channel. However, that’s not going to work, since newChannel() would then block and the UI would actually freeze until the 9P transaction completes. Sub-optimal.

The “proper” way to do this would be to create my own implementation of nsIChannel. That way I just create a new nsIChannel in newChannel() and be on my way. nsIChannel would then take care of firing callbacks as and when data arrives. There’s somewhere I can start with, and that’s the Mozilla implementation of the finger protocol. It’s written in C++, however, and I need to figure out how I can map the same to JavaScript (via XPConnect).

Fixing OLPC Crash Problem (2007/08/16)

Today I fixed the OLPC crash problem. The problem was in the lowraminit() function in memory.c: Plan9 reads the BIOS data area to figure out how much memory is free below 640 KB. Since the OLPC doesn’t have a PC BIOS, I have modified the OLPC loader to write into the BIOS data area that 639 KB is free.

I have also updated the x86amd table in devarch.c, adding an entry for the Geode-LX processor so that Plan9 can detect the Geode processor correctly.

To block or not to block (2007/08/16)

There’s one last hurdle before I finish my GSoC project. I’ve already written the JavaScript that produces binary messages for every possible 9P transaction, and all that needs to be done is to actually send those messages to the 9P server.

In a few of my previous posts, I mentioned that there were two apparent ways to do this:

a) Send the message wrapped in an XMLHttpRequest to a HTTP server that forwards the message to the actual 9P server.

b) Use Mozilla’s XPCOM components to access Sockets directly in JavaScript.

Well, it turns out that (a) is probably not a solution at all. HTTP is far from what one would call a protocol that supports streaming. A 9P server (correctly) doesn’t return an EOF until you have completed your whole session. So the first time I send an XMLHttpRequest to, say a PHP script, the script blocks forever, since PHP would never know when the first R-message has been actually sent through. I can always peek at the first 4 bytes and find out the length of the R-message, but what then? I can’t close the socket since that would terminate the 9P session, but I have to return from the script for the HTTP response to be actually sent.

PHP doesn’t support threading (proper) so I can’t do the select() mojo either. How about storing the socket FD in the session variable? Well, this is probably the closest to a good solution but that would limit every client to exactly one 9P session.
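The length prefix mentioned above is what lets a receiver cut a byte stream into messages without waiting for EOF: every 9P message starts with a 4-byte little-endian size that counts itself. The sketch below shows that framing step on its own, in JavaScript. The names readSize and splitMessages are hypothetical, the input is assumed to be a Uint8Array of whatever bytes have arrived so far, and how those bytes reach the script (proxy, socket, or otherwise) is left open.

```javascript
// Sketch: cut a byte stream into complete 9P messages using size[4].
// readSize and splitMessages are illustrative names, not js9p functions.
function readSize(bytes, offset) {
  // size[4] is little-endian. The top byte is multiplied rather than
  // shifted so that very large sizes do not become negative numbers.
  return (bytes[offset] | (bytes[offset + 1] << 8) | (bytes[offset + 2] << 16)) +
    bytes[offset + 3] * 0x1000000;
}

function splitMessages(bytes) {
  const messages = [];
  let offset = 0;
  while (bytes.length - offset >= 4) {
    const size = readSize(bytes, offset);
    // The smallest legal message is size[4] type[1] tag[2] = 7 bytes.
    if (size < 7) {
      throw new Error("invalid 9P message size: " + size);
    }
    if (bytes.length - offset < size) {
      break; // this message has not fully arrived yet
    }
    messages.push(bytes.subarray(offset, offset + size));
    offset += size;
  }
  // "rest" holds the incomplete tail, to be kept until more bytes arrive.
  return { messages: messages, rest: bytes.subarray(offset) };
}
```

A caller would keep appending newly received bytes to the returned rest and call splitMessages again. This only addresses knowing where a message ends; it does not solve the problem of keeping the 9P connection open across separate HTTP requests.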

Although Mozilla’s XPCOM is one hell of a beast, I think it might be good to just build a firefox extension to access 9P resources. Not exactly sure of how I’m going to make it work, but tentatively, I’m thinking of parsing URIs beginning with 9p:// or something like that. Let’s see how this goes.

Automated boot script for Plan9 Kernel (2007/08/16)

I have created a small Forth boot script to automate booting the Plan9 kernel on the OLPC. You need to create a /boot directory on the USB thumb drive and copy olpc.fth into it.

Once Open Firmware sees the file disk:/boot/olpc.fth, it executes the script as part of its automated boot sequence.

speaksfor (2007/08/15)

Today I completed the speaksfor program. This is an Inferno command which will be used to create a SPKI certificate which forms part of a SPKI “speaksfor” chain of delegation of authority. It works like this: the command can be invoked as

speaksfor S I [T] [V]

where S is the Subject, I is the Issuer, T is an optional tag and V is the optional validity of the certificate. Then a certificate is created which states that Subject S may now speak on behalf of Issuer I regarding the things in the tag T (by default the Subject may speak for I regarding everything). In other words, I delegates part of its authority to S.

In practical terms, currently S is the name of a public key stored in keyfs which I spoke about last time, and I is a public/private key pair read from a file. The Issuer I then signs the certificate with its private key. Although it isn’t essential for my project, I’d like to extend speaksfor so that it can also produce name certificates, which would be used to verify that a user is a member of a group.

As for what I have left to do, there are several things. Right now I’m working on a command that does SPKI reduction. I’ll write about that next time as I only just started this. I also have a couple of minor bugs to fix and man pages to write.

mset (2007/08/15)

Just checked in mset, nothing to do really with gsoc, just a quick little mandelbrot set viewer I wrote while learning limbo earlier this year. At the most, it might be useful as yet another tk/graphics example, but it’s fun to play around. Once I get some spare time I’d like to add some color cycling for fun, some text elements to display the cursor position on the complex plane and the generation of the julia set for a given point on the plane.

Update (2007/08/14)

Long time, no update. Sorry about that.

The QEMU-on-Plan 9 strategy paper has morphed, growing a lot more discussion of QEMU internals. The latest version, which is still a draft (and therefore contains some FIXME notes, sorry) is available here.

Changes to libdynld are complete. The tarball is here. Some additional commentary on these changes is forthcoming.

With all of this in place, and QEMU’s 386 target micro-ops library compiling, my dyngen can emit the right kind of code for the dynamic translator. Some comparison is available here.

OLPC plan9 kernel Changes (2007/08/14)

The following files were added to /sys/src/9/pc/; they add EGA emulation on top of the OLPC linear frame buffer:

font_sun12x22.h

lfbgeometry.h

lfbega.c

olpc-egainit.c

olpc-egalib.c

olpc-ega.h

The following files were modified:

l.s - Here we need to make a new 4 MB page table entry for mapping the OLPC’s linear framebuffer. Also, instead of calling main, olpc_display_init is called, which prints Plan9 on screen using the EGA emulation code. After that, HLT is executed so that the machine won’t reboot.

mkfile - Added entries for new EGA code.

Fiddling with Binary in JavaScript (2007/08/13)

Since ordinary JavaScript cannot directly communicate with a 9P server (over TCP), we decided to go in for a 2-tier approach: A script on the client generates a 9P message which is sent to a server via an XMLHttpRequest. The server then forwards the message to the actual 9P server. Messages from the 9P server to the client are sent in a similar fashion.

All 9P messages are just binary sequences, which means I need some way of representing a 9P message in JavaScript. A character is always 1 byte, so representing characters is not a problem. For representing integers in binary I use the following snippet of code:

while(num) {
    str[str.length] = String.fromCharCode(num % 256);
    num = Math.floor(num / 256);
}

where ‘num’ is the number to be encoded and ‘str’ is an array of one-character strings, least significant byte first; the encoded value is returned as str.join("").

This means I can now encode any 9P message as a simple sequence of JavaScript strings. The final string can then be sent along with the payload of an XMLHttpRequest. I’m wondering whether it will be a good idea to encode the string in Base64 first, although an XMLHttpRequest should have no trouble with the string representation either.
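One caveat with the loop above is that it emits no bytes at all for zero and a varying number of bytes for other values, whereas 9P fields have fixed widths (1, 2, 4 or 8 bytes, little-endian). The following is a minimal sketch of a fixed-width variant, together with a complete Tversion message built from it. The function names are illustrative and are not taken from the js9p sources; strings are assumed to be plain ASCII so that one character corresponds to one byte.

```javascript
// Sketch: fixed-width little-endian integers, one character (code 0-255)
// per byte, and a 9P2000 Tversion message assembled from them.
function encodeInt(num, width) {
  let out = "";
  for (let i = 0; i < width; i++) {
    out += String.fromCharCode(num % 256);
    num = Math.floor(num / 256);
  }
  if (num !== 0) {
    throw new RangeError("value does not fit in " + width + " bytes");
  }
  return out;
}

function encodeString(s) {
  // 9P strings are a 2-byte length followed by the bytes themselves.
  // Assumption: s is ASCII, so s.length equals its byte length.
  return encodeInt(s.length, 2) + s;
}

function encodeTversion(msize, version) {
  const TVERSION = 100;  // message type for Tversion
  const NOTAG = 0xFFFF;  // tag used for version negotiation
  const body = encodeInt(TVERSION, 1) + encodeInt(NOTAG, 2) +
    encodeInt(msize, 4) + encodeString(version);
  // The leading size[4] field counts its own four bytes as well.
  return encodeInt(body.length + 4, 4) + body;
}
```

For example, encodeTversion(8192, "9P2000") produces a 19-byte string: 4 bytes of size, 1 of type, 2 of tag, 4 of msize, and a 2-byte length followed by the 6-character version string.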

FP update (2007/08/13)

Process management is working. I have used pthreads to run Inferno’s main in a thread while the main thread keeps running as the plugin. Emuinit loads, but Firefox crashes when the ksetenv function is called, so for now I have commented out the calls to ksetenv. A proc is created to execute disinit, and I’m trying to trace whether disinit is running correctly. Kproc works, and everything seems to be fine apart from ksetenv.

Next I will work on the console. I want to let emu paint the console in the Firefox window (using X11), and I hope to achieve that tomorrow.

FIREFOX PLUGIN UPDATE (2007/08/12)

Inferno Plugin update

I have had many problems with memory management because the plugin is loaded as a shared library. When the Inferno code calls malloc/free/realloc, it ends up in the libc versions instead of the ones defined in alloc.c: since the plugin is a shared library, a call to malloc resolves to libc’s malloc before Inferno’s redefinition, and likewise for free and realloc.

To solve this issue I renamed malloc, free and realloc in alloc.c to imalloc, ifree and irealloc, and added these preprocessor directives to fns.h:

#define malloc(s) imalloc(s)
#define free(l) ifree(l)
#define realloc(v,s) irealloc(v,s)

But this results in another problem: there are calls to ifree whose argument was not allocated by imalloc, and when ifree tries to free that memory a “not in pools” error occurs. To find the ifree calls that free memory allocated by libc’s malloc rather than Inferno’s imalloc, I used the ElectricFence library (thanks Chris), loading it via LD_PRELOAD so that any call to malloc/free goes to EF’s versions. This way I found (after many problems) the ifree call that was the culprit. The call is inside the kstrdup function, shown here:

void
kstrdup(char **p, char *s)
{
	int n;
	char *t, *prev;

	n = strlen(s)+1;
	t = kmalloc(n);
	if(t == nil)
		panic("kstrdup: no memory");
	memmove(t, s, n);
	prev = *p;
	*p = t;
	free(prev);
}

Notice that kstrdup ends by calling free(prev) (which in the end is ifree(prev)), where ‘prev’ holds the previous value of the pointer that now refers to the copy of ‘s’. The problem arises when that previous value was not allocated by imalloc but by libc’s malloc. This happens with the string ‘eve’, which is initialized in main() (main.c) by calling strdup from libc. Internally strdup calls libc’s malloc, so eve’s memory was allocated by libc’s malloc, not Inferno’s imalloc. Nevertheless, in libinit() eve’s content is replaced by calling kstrdup(&eve, “newstring”), and freeing the previous content of eve goes through ifree even though it was allocated by libc’s malloc.

To solve this issue I wrote a replacement for strdup, named istrdup, that does the same as the standard strdup but calls imalloc instead of libc’s malloc.

In addition, the Inferno code calls sbrk to obtain memory from the host OS, which Inferno then manages itself. When running as a plugin, memory has to be obtained by calling NPN_MemAlloc() (Mozilla NPAPI), so I added a replacement for sbrk, named isbrk, that hands memory to Inferno through Mozilla’s API. To make the plugin use istrdup/isbrk instead of libc’s strdup/sbrk, I added further directives to fns.h:

#define strdup(s) istrdup(s)
#define sbrk(s) isbrk(s)

With this, the memory management problem seems to be solved (for the present).

Process Management

The Mozilla Firefox plugin API does not offer functions to create and manage processes or threads, so the plugin can directly use the same process-creation calls as on Linux. So far I have tried creating processes with kproc, and everything seems to be all right.

Current Status

The problem I have now is with the calls to ksetenv: when ksetenv is called in emuinit, Firefox crashes, and all it says is “Firefox: Unknown error 2988458393”. I have traced the calls, and it seems that ksetenv (env.c) calls namec() (chan.c); the flow enters the switch(amode) statement and goes to the ‘Acreate’ branch, where it calls walk() and the error occurs.

If I comment out the ksetenv call, emuinit runs without problems. However, emuinit is called from libinit by:

executeonnewstack(tos, emuinit, imod);

After this statement nothing else is executed, so the ‘for’ loop at the end of emuinit keeps control of the main plugin process. The plugin then neither responds nor offers the NPP functions that Mozilla needs, fails to answer the browser’s requests, and finally crashes. The plugin must provide many NPP functions in order to communicate with Firefox. One of these is NPP_Init, where the plugin is initialized and where I have added the call to ipluginmain() (firefox-os.c), the entry point into Inferno from the plugin.

A possible solution is to create a new independent process in which to execute ipluginmain while the main process keeps running and receiving browser requests…

Planning:

I have these aims:

- Memory initialization

- Threads/process initialization

- console

- “Hello world”

- text input (console, but keyboard)

- graphics and mouse events

- cleaning

Memory initialization seems to be solved. Process initialization seems to work, but it could still cause problems. emuinit does not load correctly because of the ksetenv problem; once I solve this, I hope to have an initialized and running Inferno.

The next steps are:

- Console: paint the console (emu running) -> On August 14

- Hello World: execute a simple “hello world” within the console -> On August 15

- Text input by keyboard to feed the console -> On August 17

- Paint graphics and handle mouse events -> On August 22

- Clean the code -> On August 25

Any comments are welcome.

Thanks.

cocytus update (2007/08/11)

The past few days have been fairly unproductive because of a few orientation days for school. I plan to devote this weekend to gsoc; once I see how much I can get done over the weekend, I’ll have a good idea by Monday of what needs to be done, whether completing the project is possible, etc. I’ll update over the weekend and then give a detailed post on Monday describing what is done, what needs to be done, and whether or not I think it’s possible to complete.

Booting plan9 on OLPC (2007/08/10)

Finally, the Plan9 kernel is up and running on OLPC !!

Here is the complete procedure to boot plan9 on OLPC:

You will need a USB thumb drive and an OLPC laptop (of course).

The following 3 files are needed; they should be stored on the USB drive.

- plan9.ini : plan9 configuration file

- 9pcf : plan9 kernel

- loader.elf : Bootloader which loads plan9 kernel (9pcf) on OLPC

When you start the OLPC laptop, you need to interrupt the boot sequence to get to the Open Firmware (OFW) command prompt. Here is the procedure:

- Hold down the Game key when machine starts.

- When asked (on screen), release the Game key. After you release it, OFW will start probing for hardware.

- After the probing finishes, OFW will ask you to press the Esc key within 3 seconds. Once you press Esc, you will land at the OFW command prompt.

To check the files on the attached thumb drive, execute the following command:

dir disk:\

Execute the following command to boot loader.elf instead of the standard Linux kernel:

setenv boot-device disk:\loader.elf

Execute the following command to keep the USB system alive after OFW transfers control to the plan9 boot loader:

' noop to go-hook

Execute the following command to map OLPC’s linear frame buffer to virtual address 0xfd000000:

h# 910 config-l@ dup 100.0000 -1 mmu-map

Finally, we are ready to boot plan9. Here is the final command:

boot

And you should see Plan9 printed on screen !!

Currently it only prints plan9, as there is no proper display driver.

Swapping Endian-ness in JavaScript (2007/08/09)

My mentor came up with two interesting ways of swapping endian-ness in JavaScript. The first one he proposed was based on what was “usually done in Plan 9”, something along the lines of:

b = "\1\1\1\2";

n = (b.charCodeAt(0) & 0xff) << 24;

n += (b.charCodeAt(1) & 0xff) << 16;

n += (b.charCodeAt(2) & 0xff) << 8;

n += (b.charCodeAt(3) & 0xff);

…which gives us n = 16843010.

Maht then had a look at the series of JavaScript lectures by Douglas Crockford at Yahoo!. The first of the series tells us that bit shifting is not faster than simple multiplication. Maht gave me this code snippet doing the same conversion, but using multiplication instead of bit shifts this time:

b = "\1\1\1\2";

n = b.charCodeAt(0) * 16777216;

n += b.charCodeAt(1) * 65536;

n += b.charCodeAt(2) * 256;

n += b.charCodeAt(3) * 1;

n is, of course, 16843010; the real question is how much longer (or shorter) this took.
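The two conversions can also be cross-checked outside the browser. This is only a minimal Python sketch, not the JavaScript being profiled; the function names `be32_shift` and `be32_multiply` are made up for illustration, and Python's built-in `int.from_bytes` serves as an independent reference.

```python
def be32_shift(b: bytes) -> int:
    """Combine four bytes, most significant first, using bit shifts."""
    return (b[0] << 24) | (b[1] << 16) | (b[2] << 8) | b[3]


def be32_multiply(b: bytes) -> int:
    """Same conversion using multiplication by powers of 256."""
    return b[0] * 16777216 + b[1] * 65536 + b[2] * 256 + b[3]


data = bytes([1, 1, 1, 2])  # same four bytes as the "\1\1\1\2" string
print(be32_shift(data), be32_multiply(data), int.from_bytes(data, "big"))
```

One difference to keep in mind for the JavaScript versions: shift results there are signed 32-bit values, so a first byte of 128 or more gives a negative number with the shift variant but not with the multiplication variant. Python integers do not have this limit.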

Venkman is probably one of the more mature “old-school” JavaScript debuggers out there. FireBug, the relatively modern sibling to Venkman, certainly has a few nifty features, but profiling is not one of its strong points. After failing to profile the script in FireBug, I used the trusty old Venkman - and it came up with some interesting results:

Venkman Profile Report

Created ………. Thu Aug 09 2007 20:18:54 GMT+0530 (IST)

User Agent ……. Mozilla/5.0 (Macintosh; U; Intel Mac OS X; en-US; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6

Debugger Version . Venkman 0.9.87 [Mozilla rv:1.8.1.6/2]

..

Function Name: multi (Lines 1 - 6)

Total Calls: 1 (max recurse 0)

Total Time: 0.21 (min/max/avg 0.21/0.21/0.21)

Time (ex. calls): 0.21 (min/max/avg 0.21/0.21/0.21)

..

Function Name: shift (Lines 8 - 13)

Total Calls: 1 (max recurse 0)

Total Time: 0.02 (min/max/avg 0.02/0.02/0.02)

Time (ex. calls): 0.02 (min/max/avg 0.02/0.02/0.02)

As you can tell from the function names, multi uses simple multiplication, while shift uses bit-shifting. It turns out that bit-shifting does indeed take a lot less time. A Firefox quirk?

Inferno SPKI (2007/08/08)

This is my first post on here, and I guess it is about time I posted some news on my project. My project involves extending the SPKI infrastructure of Inferno by implementing various file servers and commands. Using SPKI for authentication improves scalability and provides a way to define group membership using the “speaks-for” relationship with SPKI certificates.

The first part of my project was not actually related to SPKI, and involved adding support for Inferno authentication to the factotum of Plan 9 and p9p. I completed a basic implementation of this, although it would benefit from further testing.

I then wrote a SPKI verifier. The verifier is implemented as a file server using file2chan, and accepts a SPKI certificate sequence as input. If the sequence is verified correctly, the verifier then serves a file containing an Inferno certificate which can be used to access services.

Right now I am working on a SPKI version of keyfs for Inferno. This keyfs stores SPKI keys and certificates securely in an encrypted keyfile. It is implemented as a Styx file server which serves three directories within /mnt/keys: pk/, sk/, and cred/, which contain public keys, private keys, and all credentials including certificates respectively. Users can add new keys and certificates and name them by writing an S-expression to the “new” file which is provided.

So, next I will probably be adding more features to the keyfs, and hopefully also beginning work on some other related things. My code can be found at http://code.google.com/p/inferno-spki/.

I am enjoying the project a lot and learning so much, even though I have found it difficult. I will give another update very soon.

Plan9 kernel image format (2007/08/07)

Today I decided to abandon the plan9 ELF booting effort. The plan9 linker’s ELF output is not correct, and this is creating a lot of problems.

So now I am going to write a custom boot loader for plan9’s kernel.

The first thing I need to do is understand the plan9 kernel’s format.

Here is the information that I got from a.out.h:

struct Exec

{

long magic; /* magic number */

long text; /* size of text segment */

long data; /* size of initialized data */

long bss; /* size of uninitialized data */

long syms; /* size of symbol table */

long entry; /* entry point */

long spsz; /* size of pc/sp offset table */

long pcsz; /* size of pc/line number table */

};

I wrote a simple C program to read a plan9 kernel image and print the above information from it.

Kernel file image size = text + data + syms + spsz + pcsz

Another important thing I found out is that this header is in big endian format, so on the x86 architecture we need to swap the data to read it correctly.
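The header layout and the byte-order point can be illustrated with a short sketch. This is Python, not the C program mentioned above, and it assumes that every field of struct Exec is a 4-byte big endian integer. The names `parse_exec_header` and `image_size` and the sample values are invented for the example.

```python
import struct

# Field order follows the struct Exec listing; each field is assumed to be
# a 4-byte big endian integer.
EXEC_FIELDS = ("magic", "text", "data", "bss", "syms", "entry", "spsz", "pcsz")
EXEC_FORMAT = ">8I"  # ">" selects big endian, "8I" is eight unsigned 32-bit values


def parse_exec_header(raw: bytes) -> dict:
    """Decode the leading header bytes of a kernel image into named fields."""
    size = struct.calcsize(EXEC_FORMAT)
    return dict(zip(EXEC_FIELDS, struct.unpack(EXEC_FORMAT, raw[:size])))


def image_size(hdr: dict) -> int:
    """Sum the sections named in the size formula (header bytes not counted)."""
    return hdr["text"] + hdr["data"] + hdr["syms"] + hdr["spsz"] + hdr["pcsz"]


# Placeholder header values, only to exercise the functions.
sample = struct.pack(EXEC_FORMAT, 0x1EB, 4096, 512, 256, 128, 0xF0100020, 0, 64)
hdr = parse_exec_header(sample)
print({name: hex(value) for name, value in hdr.items()})
print(image_size(hdr))
```

The fields are read as unsigned here so that an entry point such as 0xF0100020 prints as a positive number; the C struct declares them as long. Because `struct` does the byte swapping according to the format string, the same code reads the header correctly on both little endian and big endian hosts.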

cocytus update (2007/08/07)

Have implemented some of the data structures… not 100% sure they cover everything needed, but will know as soon as the styx operations are implemented, which is the next step. Will begin tomorrow with the fundamentals, i.e. read/open/walk. This should give a good feeling for whether or not everything is working as it should and whether the data structures are sufficient. Write will then be implemented once I am sure the basics are working properly. A semi-rough timeline would be to get the read/open/walk operations done by Wed-Thurs, then have write working by the end of the week.

Mmu setup problem for kernel startup (2007/08/07)

By default the plan9 kernel’s text section starts at 0xF0100020, which is around the 3841 MB mark of the virtual address space. 9load loads the kernel at physical address 0x100020, and the kernel then sets up the correct memory mappings using paging.

For OLPC we are using the ELF format. OLPC’s open firmware determines the start address of the ELF text section from the ELF header. If we compile the kernel in ELF format and ask the linker to link its text section at address 0xF0100020, OFW gives a page fault, since this address is not present physically and is not mapped in the current page tables.

I am linking the ELF kernel at physical address 0x100020, so it is loaded correctly by the OFW loader. But once control is transferred to the plan9 kernel, the kernel code expects all addresses to be above 0xF0100020. This is the main problem that needs to be solved.
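The relation between the two addresses can be checked with a little arithmetic. This is a sketch that assumes the kernel mapping is a single fixed linear offset; `KERNEL_OFFSET` and `virt_to_phys` are illustrative names, not identifiers taken from the kernel source.

```python
VIRT_TEXT = 0xF0100020  # virtual start of the text section (from the post)
PHYS_TEXT = 0x00100020  # physical address where the kernel is loaded (from the post)
MB = 1 << 20

# Assumption: virtual and physical kernel addresses differ by a constant offset.
KERNEL_OFFSET = VIRT_TEXT - PHYS_TEXT


def virt_to_phys(va: int) -> int:
    """Translate a kernel virtual address under the linear-offset assumption."""
    return va - KERNEL_OFFSET


print(hex(KERNEL_OFFSET))            # offset between the two address spaces
print(VIRT_TEXT // MB)               # whole megabytes below the text start
print(hex(virt_to_phys(VIRT_TEXT)))  # lands on the physical load address
```

Until paging is enabled with a mapping like this, any code linked for the high addresses refers to memory that does not exist, which is the page fault described above.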

cocytus update (2007/08/06)

Have the styxserver running; basically used vacfs as the template for sending and receiving styx messages. Right now it is basically just a skeleton for the file server. Am working now on implementing the various operations which will need to be performed on the venti side, i.e. the mapping of the styx messages to the venti operations. The data structures involved are being implemented as well; this step is being done before the operations. The vac and venti libraries contain some basic structures which will be used, but I will need to implement some things to keep track of the modified/unmodified states of the blocks.

Rabin fingerprints (2007/08/06)

rabin fingerprinting

I have been a bit quiet, time to bring some news! Quite a bit has happened since the previous post. The most important change is the new rabin fingerprinting code which can now be used by vacput, vacget and vacfs to split blocks not at fixed byte offsets, but at content boundaries. This code for rabin fingerprinting has actually been in the hg repository for some time, but I recently changed a few of the parameters, making the block boundaries much better, and hadn’t yet explained it. So that’s what this post is about.

the algorithm

The rabin fingerprinting algorithm calculates a rolling checksum over data (a file to store in venti). The window of the data to look at is configurable, but typically a few dozen bytes long. The rabin module will read through a file, and let the window “slide” over the data, recalculating the fingerprint each time it advances a byte. When the fingerprint assumes a special value, the rabin module considers the corresponding window position to be a boundary. All data preceding this window position is taken to be a “block” of the file.

Calculating a checksum relative to a previous one is not very expensive cpu-wise. The operations involved are:

- Multiplying the previous checksum by a prime (the prime is a parameter of the algorithm).

- Adding the value of the byte that has just come into the sliding window.

- Subtracting the value of the byte that has just slid out of the window times the nth power of the prime (where n is the width of the sliding window; the 256 possible values are precalculated during module initialisation).

- Taking the modulo of the value by the average desired block size (for now, the average desired block size is required to be a power of two).

- Checking whether the new checksum has the special value that makes it a boundary.

This means the algorithm has three parameters: prime, width and modulo. The properties that make this algorithm useful for our purpose are: 1. the fingerprints are cheap to calculate; 2. the same boundaries are found, no matter where in the file the content occurs. Thus, when a byte has been prepended to or inserted into a file, this has no influence on how later blocks are formed, as opposed to the case where all block boundaries are at fixed file offsets.

This implementation also allows you to specify a minimum and maximum block size. If a block boundary occurs before the minimum block size is reached, it is ignored. If no block boundary occurs before the maximum block size, the window is treated as a block boundary anyway and a new block is emitted.

At first I had set the width of the sliding window to three, but after reading through lots of data (my homedir, ~7GB in size) with different values for the parameters, it was clear that a window width of three is too small: too few unique boundary patterns existed, and the ones that did exist were found too often. It has now been set to 31. The tests showed that which prime number to choose did not matter (for the values I tested). The modulo (average block size) is reasonably accurate, but the minimum and maximum block sizes, and the fact that end of files result in short blocks, skew the actual average block size a bit. The special value at which to find boundaries had at first been set to zero: not good, patterns of all zero bytes will be a block boundary this way, causing lots of boundaries on “null” data. Better is to use the modulo-1, which is the value currently being used.
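The steps described in this section can be sketched as follows. This is an illustration in Python, not the actual rabin module. The width of 31 and the boundary value of modulo-1 come from the text; the prime, the modulo and the minimum and maximum sizes are placeholder values; `split_blocks` is a made-up name; and keeping the window running across block boundaries is a choice of this sketch.

```python
from collections import deque


def split_blocks(data, prime=269, width=31, modulo=8192,
                 min_size=1024, max_size=32768):
    """Split data into blocks at content-defined boundaries."""
    special = modulo - 1                       # boundary value, as in the text
    power = pow(prime, width, modulo)          # prime**width, reduced
    # Outgoing byte times prime**width, precalculated for all 256 values.
    out_table = [(value * power) % modulo for value in range(256)]
    blocks, start, checksum = [], 0, 0
    window = deque()
    for pos, byte in enumerate(data):
        window.append(byte)
        # Until the window is full nothing slides out (counted as byte 0).
        outgoing = window.popleft() if len(window) > width else 0
        checksum = (checksum * prime + byte - out_table[outgoing]) % modulo
        size = pos + 1 - start
        if (checksum == special and size >= min_size) or size >= max_size:
            blocks.append(data[start:pos + 1])
            start = pos + 1
    if start < len(data):
        blocks.append(data[start:])            # short final block at end of file
    return blocks


sample = bytes((i * i + 7 * i) % 251 for i in range(100000))
blocks = split_blocks(sample, modulo=1024, min_size=128, max_size=4096)
print(len(blocks), b"".join(blocks) == sample)
```

Once the window is full, the checksum depends only on the last `width` bytes, so a boundary found in some piece of content is normally found again when that content shifts to a different offset. The minimum and maximum sizes can occasionally interfere with this.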

not there yet

Okay, so we can split a file in blocks in another way. Unfortunately, this cannot be integrated into vacput/vacget/vacfs without changing the data structures of venti archives. A hash tree stored in a venti archive needs all its blocks to be of a fixed length, typically 8KB. Vacget/vacfs use this assumption when finding the right block for fulfilling a read for a given offset in a file; they use it to determine which score in a pointer block to follow to get to the data block.

Now consider what happens when a (random) file offset in a vac+rabin fingerprinting archive should be read. When starting at the top pointer block of a hash tree, there is no way of knowing below which score the requested offset resides. Previously it could be calculated using the depth of the tree and the width of the tree branches and leaf size.

To solve this, I have changed the format of the pointer blocks. Each score is now accompanied by the size of the subtree the score references. The size referenced by the top score can be found, as usual, in the Entry data structure (which also contains the top score).

When walking from the top down to some file offset, the sizes of the subtrees allow vacget/vacfs to determine which ranges of the file reside below each score in the pointer block.
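The lookup within a single pointer block can be sketched like this. It assumes only that each score carries the size of its subtree, as described above; `find_child` and the sample sizes are invented for the example, and fetching and descending into the child block is left out.

```python
def find_child(sizes, offset):
    """Return (index of the score to follow, offset inside that subtree).

    sizes[i] is the number of file bytes stored below score i of one
    pointer block.
    """
    start = 0
    for index, size in enumerate(sizes):
        if offset < start + size:
            return index, offset - start
        start += size
    raise ValueError("offset beyond the data covered by this pointer block")


# Made-up subtree sizes for one pointer block.
sizes = [9000, 7500, 8200]
print(find_child(sizes, 0))      # first byte of the first subtree
print(find_child(sizes, 9000))   # first byte of the second subtree
print(find_child(sizes, 20000))  # somewhere inside the third subtree
```

Repeating this step at each level, with the returned offset passed down, leads from the top score to the data block that holds the requested byte.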

more (data structure) changes

The pointer block is not the only thing that has changed. To make sure the two types of data can never be misinterpreted, the new pointer blocks are stored with different “type” numbers in venti: the usual values added to 16; so 19 for depth 0, 20 for depth 1, and so on.

There is a new root block version, version 3. The maximum data block and pointer block size can be left intact (though I am currently writing them with “0” filled in).

The “flags” field in the Entry structure has a new bit set (1<<7) to indicate the entry has variable-size blocks. In theory venti archives can be made with the two types of entries (variable sized blocks or not) mixed.

more ideas

A few more ideas have crossed my mind:

Currently, the new pointer blocks always hold a fixed number of scores (either to more pointer blocks or to data). When a new block is inserted into an existing hash tree (e.g. when a block is split while writing a new version of an already existing archive), it now changes all pointer blocks to the right of it in the hash tree. Since we now have variable block sizes anyway, we could as well grow the current pointer block by another score (unless of course it would grow too large). This would mean fewer pointer blocks need to be written when storing a new version of a file. I am not sure how much it really helps though, and whether the added code complexity is warranted.

Rather simple, but currently the size of a subtree is always stored in 8 bytes. This grows the size of one pointer from 20 bytes (the score size) to 28 bytes. The lowest-level pointer blocks, which point to blocks of at most 32KB, will be the most numerous. Thus, perhaps a variable length encoding of the size could be used. But it probably isn’t worth the trouble.

Should the rabin parameters be configurable, and parametrized into the root block of an archive? Probably not, well-chosen defaults should help. At least changing the prime doesn’t make a difference, and the sliding window width should be okay too. Only the average block size may be worth parametrizing, perhaps as “data block size” in the root block. This information is only useful for writing venti archives, so in this case, for writing an archive relative to a previous version.
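To give an idea of what the variable-length size encoding mentioned among the ideas above could save, here is one possible scheme: 7 bits per byte, with the high bit marking that more bytes follow. This encoding is purely an assumption for illustration, nothing in the current code uses it, and `encode_size` and `decode_size` are made-up names.

```python
def encode_size(n: int) -> bytes:
    """Encode a non-negative size, 7 bits per byte, low-order bits first."""
    if n < 0:
        raise ValueError("size must be non-negative")
    out = bytearray()
    while True:
        low = n & 0x7F
        n >>= 7
        if n:
            out.append(low | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low)
            return bytes(out)


def decode_size(buf: bytes) -> int:
    """Decode a size written by encode_size."""
    n = 0
    for index, byte in enumerate(buf):
        n |= (byte & 0x7F) << (7 * index)
        if not byte & 0x80:
            return n
    raise ValueError("truncated size")


for size in (0, 8192, 32768, 2**40):
    print(size, len(encode_size(size)))
```

Under this scheme a subtree size of 32KB takes three bytes instead of eight, so the lowest-level pointers would shrink from 28 to 23 bytes. Very large sizes cost more than the fixed format: a full 64-bit value needs ten bytes.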

conclusions

It seems the current rabin fingerprinting code works and can be used to write and read venti archives. I still want to test how fast fingerprinting is (both with and without JIT), and whether not using powers of two as the modulo has a noticeable influence on performance.

I am probably forgetting to explain something, please bug me about it on irc or send me an e-mail.

Faulty Page Fault Handler (2007/08/05)

I'm still puzzled by the “hw tlb loading disabled w/o sw loading available” warning displayed by the simulator. I verified that software TLB management is enabled, and that the correct interrupt handler is invoked on a page fault. Having run out of ideas, I moved on to the next issue. The page fault handler (which eventually causes mmuput() to be called) behaves correctly the first time it's called, that is, a vacant TLB entry is used to map the page. But when the next fault occurs, mmuput() panics as it finds that the virtual page it's trying to map already exists in the TLB, which means one of two things: either the checking used in mmuput() itself is buggy, or there's an inconsistency between the hardware TLB & the STLB cached by the kernel, which is unlikely. My biggest problem now is that I can't even use print() for debugging. mmuput() is extremely sensitive to any modification I make (maybe because it's running in an interrupt -- there might be some restrictions that I'm not aware of). For example, this is what I get when I modify the condition used in checking if an entry is duplicated: